Configure a Program

A program groups tests that share reporting features and visibility settings. Grouping tests this way lets you compare results by content area and grade, and track student performance over time. A test must be assigned to a program to be available in the Performance Report.

In most cases, your program delivery team will configure programs after working with site administrators to determine reporting requirements.

Step-by-Step

- Select Test Management, then select Program Configuration.

- To create a program, you can:

- Select Create New to set up a new program.

- Select the Clone icon in the Actions column to duplicate an existing program and modify it as needed.

Reference the expandable sections below to configure the new program.

After creating a program...

You can assign tests to it when creating a test from Test Management > Tests.

After you assign a test to a program, the program's score type selections, windows, grade levels, and content areas cannot be changed. Make sure to carefully plan and configure the program before assigning tests.

You can override some program settings at the test level. For Performance Levels, cut scores and labels can differ by test, but you cannot change which levels count as proficient, the color, or add/remove levels.

General Settings (such as whether to display item analysis) and ISR Settings can be modified later, even while students are taking tests.
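The locking rule above can be pictured with a small sketch. This is not ADAM's actual data model: the `Program` class, the setting keys, and `LOCKED_AFTER_ASSIGNMENT` are hypothetical names chosen only to illustrate which settings freeze once a test is assigned.

```python
from dataclasses import dataclass, field

# Hypothetical model: these names are illustrative, not ADAM's actual schema.
LOCKED_AFTER_ASSIGNMENT = {"score_types", "windows", "grades", "content_areas"}


@dataclass
class Program:
    name: str
    settings: dict = field(default_factory=dict)
    assigned_tests: list = field(default_factory=list)

    def update_setting(self, key, value):
        """Change a setting unless it is locked by an assigned test."""
        if self.assigned_tests and key in LOCKED_AFTER_ASSIGNMENT:
            raise ValueError(f"'{key}' cannot be changed after a test is assigned")
        self.settings[key] = value

    def assign_test(self, test_name):
        self.assigned_tests.append(test_name)


program = Program("District Benchmarks")
program.update_setting("windows", ["Fall", "Spring"])  # allowed: no tests yet
program.assign_test("Algebra I Fall Benchmark")
program.update_setting("show_item_analysis", True)     # General Settings stay editable
```

After the test is assigned, a call such as `program.update_setting("windows", ["Winter"])` raises `ValueError` in this sketch, which is why the windows, grades, and content areas should be planned before the first assignment.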

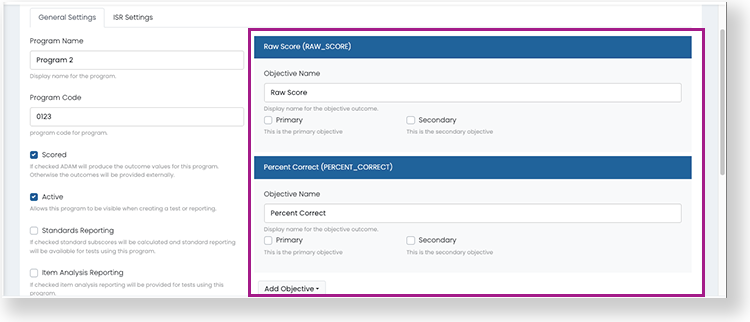

Complete initial program setup on the General Settings tab. Define when, who, and what the program is used for.

You must define a primary performance objective before you can save data in General Settings.

Program Name: Required. This name will be used when selecting a program in the performance reports, so it should be easy to recognize.

Program Code: Optional. Not currently used or displayed on reports.

ADAM Scored: Select for tests delivered through the ADAM player. Leave this checkbox unchecked for tests delivered externally (such as through TestNav). A program cannot contain both ADAM and external tests.

Active: Select to make the program available when assigning a test to a program and in reporting. You can leave this unchecked until you are ready to use the program.

Class Reports: Select to allow users to access class-level reports in My Classes and Reporting for tests in the program.

- Student Results: Show a combined view of all student results.

Program Report: Program Reports compare results from different tests in the same program and support reporting by demographics.

Standards Reporting: Standard sub-scores will be calculated and standards reporting will be available to teachers and administrators. For ADAM-delivered tests only.

Item Analysis Reporting: Item analysis reporting will be available to teachers and administrators. For ADAM-delivered tests only.

- Show item content, answers and student responses

- Show trait level reporting: Available for open response items

Hide aligned items details: If checked, aligned item details will not be visible on the Standard Performance report.

Show Student Their Results: Allows students to see their results in ADAM. For ADAM-delivered tests only.

Show Student Item View: Allows students to see the test items in ADAM. For ADAM-delivered tests only.

Review Only: If checked, only Program Reviewers will see this program.

Available Testing Windows: Required. Enter one or more window names (such as Fall, Spring). These are simply labels to facilitate reporting over time and do not control when the student can test.

Available Grades: Required. Select one or more grade levels to use in the program.

Available Content Areas: Required. Select one or more content areas used in the program, such as Algebra or ELA (these are defined in System > Client Settings). Content areas enable you to have multiple assessments for the same subject and area (e.g., Algebra II and Geometry).

School Year

On the right side of the screen, define the performance objectives that you want to appear in reports, such as raw score. The available objectives vary based on whether the ADAM Scored checkbox is selected in General Settings. Raw Score and Percent Correct objectives appear by default.

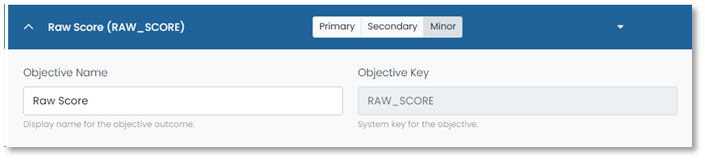

The objective configuration is a template that can be changed at the test level. For example, if you define cut scores here, they can be changed on individual tests when assigning them to the program.

Raw Score (RAW_SCORE): Always available. Reports show raw score if selected as a primary or secondary objective.

Percent Correct (PERCENT_CORRECT): Always available. Reports show percent correct if selected as a primary or secondary objective.

Additional Objectives

Select from the Add Objective dropdown to add additional objectives. You can rename objectives in the Objective Name field.

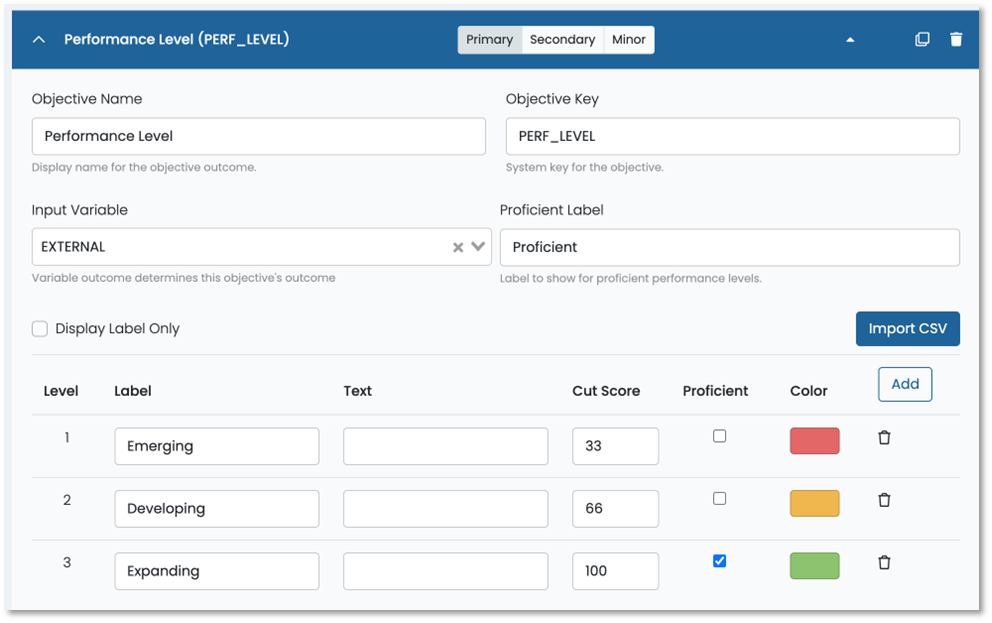

Performance Level (PERF_LEVEL):

- Usually set as the primary objective when used.

- Input Variable: Select RAW_SCORE or PERCENT_CORRECT (or another input based on an objective you have created). This value will be used to determine the output score.

- Performance Level Settings: Enter the Label, Text description (such as "Above expectations"), and Cut Score.

- Check the Proficient box when applicable for the performance level. Levels tagged as Proficient are used to calculate the Proficiency value in the Performance Report.

- Optionally, change a level's color by selecting its existing color. Select Add to create more levels.

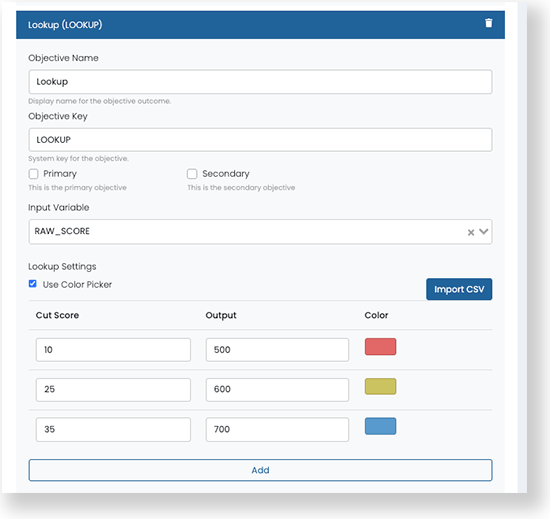
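As a rough illustration of how cut scores turn an input value into a performance level, here is a minimal sketch. It rests on two assumptions that this page does not confirm: each cut score is treated as the highest input value that still falls in that level, and the Proficiency value is treated as the percentage of students whose level is tagged Proficient. The `Level` class and both function names are hypothetical.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass(frozen=True)
class Level:
    label: str
    cut_score: float          # ASSUMPTION: highest input value still in this level
    proficient: bool = False


def level_for(score, levels):
    """Return the level whose cut score is the first one >= score."""
    ordered = sorted(levels, key=lambda lv: lv.cut_score)
    cuts = [lv.cut_score for lv in ordered]
    idx = bisect_left(cuts, score)
    # Scores above the highest cut score fall into the top level.
    return ordered[min(idx, len(ordered) - 1)]


def proficiency_rate(scores, levels):
    """ASSUMPTION: percent of students whose level is tagged Proficient."""
    if not scores:
        return 0.0
    hits = sum(level_for(s, levels).proficient for s in scores)
    return 100.0 * hits / len(scores)


LEVELS = [
    Level("Below expectations", 40),
    Level("Meets expectations", 70, proficient=True),
    Level("Above expectations", 100, proficient=True),
]

print(level_for(55, LEVELS).label)             # Meets expectations
print(proficiency_rate([30, 55, 90, 40], LEVELS))  # 50.0
```

If cut scores in your configuration act as lower bounds instead, the comparison would need to be flipped; confirm the behavior against a test with known scores before relying on either reading.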

Lookup (LOOKUP):

- Enter a database key in the Objective Key field, such as "LOOKUP_Scale." This field CANNOT be blank.

- Input Variable: Select RAW_SCORE or PERCENT_CORRECT (or another input based on objectives you have created). This value will be used to determine the output score.

- Lookup Settings: Enter data in the Cut Score and Output fields. You can also import a CSV file.

- Cut scores start at zero implicitly, so the lowest value you enter can be greater than zero. You may enter zero if you define a cut score for every raw score.

- Optionally, select the Use Color Picker checkbox to customize the colors of the score groups. Select Add to create more levels.

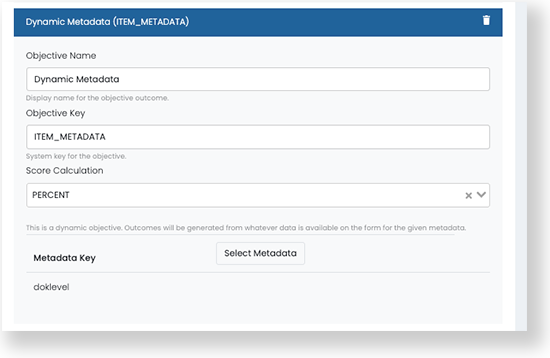
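A lookup objective behaves like a conversion table from an input score to an output score. The sketch below shows one way to read such a table from CSV text and apply it. The column names `cut_score` and `output` are hypothetical, since the required CSV layout is not described here, and the code assumes each cut score is the highest input value mapped to that row's output.

```python
import csv
import io
from bisect import bisect_left


def load_lookup(csv_text):
    """Parse CSV text into a list of (cut_score, output) pairs sorted by cut score.

    ASSUMPTION: the columns are named 'cut_score' and 'output'.
    """
    rows = csv.DictReader(io.StringIO(csv_text))
    return sorted((float(r["cut_score"]), r["output"]) for r in rows)


def lookup(table, value):
    """Return the output of the first row whose cut score is >= value."""
    cuts = [cut for cut, _ in table]
    idx = bisect_left(cuts, value)
    if idx == len(table):
        raise ValueError(f"{value} is above the highest cut score")
    return table[idx][1]


CSV_TEXT = "cut_score,output\n10,200\n20,250\n30,300\n"
table = load_lookup(CSV_TEXT)

print(lookup(table, 0))    # 200 (the range starts at zero implicitly)
print(lookup(table, 11))   # 250
```

Outputs are kept as strings in this sketch so that the table can hold either numeric scores or labels.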

Dynamic Metadata (ITEM_METADATA):

- Enter a database key in the Objective Key field, such as "DYNAMIC_METADATA." This field CANNOT be blank.

- Score Calculation: Select NUMBER or PERCENT.

- Select Metadata: Select a Bank, then select a Metadata Field.

- You can only select one Metadata Field for each Dynamic Metadata objective.
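To make the NUMBER and PERCENT options more concrete, the sketch below groups item responses by the value of one metadata field and reports either points earned or percent of points earned per group. This interpretation of the two options is an assumption, and the response structure (`metadata`, `points_earned`, `points_possible`) is hypothetical.

```python
from collections import defaultdict


def metadata_scores(responses, metadata_field, calculation="NUMBER"):
    """Aggregate item scores by the value of a single metadata field.

    ASSUMPTION: NUMBER means points earned per group, and PERCENT means
    points earned as a percentage of points possible per group.
    """
    earned = defaultdict(float)
    possible = defaultdict(float)
    for response in responses:
        group = response["metadata"].get(metadata_field)
        if group is None:
            continue  # item is not tagged with this metadata field
        earned[group] += response["points_earned"]
        possible[group] += response["points_possible"]

    if calculation == "NUMBER":
        return dict(earned)
    if calculation == "PERCENT":
        return {g: 100.0 * earned[g] / possible[g] for g in earned if possible[g]}
    raise ValueError("calculation must be NUMBER or PERCENT")


RESPONSES = [
    {"metadata": {"skill": "Reading"}, "points_earned": 1, "points_possible": 1},
    {"metadata": {"skill": "Reading"}, "points_earned": 0, "points_possible": 1},
    {"metadata": {"skill": "Writing"}, "points_earned": 2, "points_possible": 4},
    {"metadata": {}, "points_earned": 1, "points_possible": 1},
]

print(metadata_scores(RESPONSES, "skill", "NUMBER"))   # {'Reading': 1.0, 'Writing': 2.0}
print(metadata_scores(RESPONSES, "skill", "PERCENT"))  # {'Reading': 50.0, 'Writing': 50.0}
```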

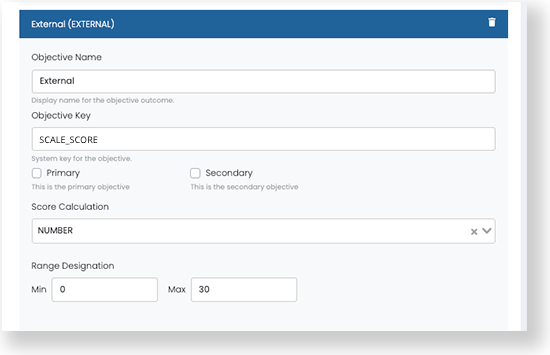

External (EXTERNAL):

- Only available when the ADAM Scored checkbox is NOT selected in General Settings (i.e., tests are delivered in TestNav).

- Objective Key must match the external passed data, for example "SCALE_SCORE."

- Score Calculation: Select NUMBER or PERCENT.

- Range Designation: Enter the default low and high scaled scores or number of questions, with 0 as minimum.

- The range for each test can be defined later when tests are added to the program.
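The relationship between the program's default range and a test-specific range can be sketched as follows. The function names are hypothetical, and the validation rules (minimum of 0, maximum above the minimum) are an interpretation of the Range Designation description rather than documented behavior.

```python
def resolve_range(program_default, test_override=None):
    """Pick the test-level range when one is defined, else the program default."""
    low, high = program_default if test_override is None else test_override
    if low < 0:
        raise ValueError("range minimum cannot be below 0")
    if high <= low:
        raise ValueError("range maximum must exceed the minimum")
    return low, high


def within_range(score, score_range):
    """Check that an externally supplied score fits the configured range."""
    low, high = score_range
    return low <= score <= high


default_range = (0, 500)                                   # set on the program
algebra_range = resolve_range(default_range, (100, 300))   # set on one test

print(resolve_range(default_range))      # (0, 500)
print(within_range(250, algebra_range))  # True
```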

Primary, Secondary and Minor Objectives

A program must have a primary performance objective, and optionally, secondary and minor objectives. The primary objective is the main focus of the report and will be emphasized on the report. Secondary and minor objectives will also be visible but not as prominent.

For example, the primary objective may be Performance Level and the secondary objective may be Raw Score or Percent Correct. Performance reports will emphasize score groups (with colors) defined in the Performance Level objective, while the secondary objective provides the score used to determine the performance levels. You may define additional objectives that feed data to the primary or secondary objectives shown on the reports.

For each objective, select Primary, Secondary or Minor.
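The requirement that a program have a primary objective can be expressed as a simple check. The sketch below is illustrative only: the role constants and the `validate_roles` function are hypothetical, and it checks only for at least one primary objective, which is all this page states.

```python
ROLES = ("PRIMARY", "SECONDARY", "MINOR")  # hypothetical role constants


def validate_roles(objectives):
    """Validate a mapping of objective key -> role.

    Raises ValueError for an unknown role or when no objective is primary.
    """
    for key, role in objectives.items():
        if role not in ROLES:
            raise ValueError(f"{key}: unknown role '{role}'")
    if "PRIMARY" not in objectives.values():
        raise ValueError("a program must have a primary objective")
    return True


objectives = {
    "PERF_LEVEL": "PRIMARY",
    "PERCENT_CORRECT": "SECONDARY",
    "RAW_SCORE": "MINOR",
}

print(validate_roles(objectives))  # True
```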

Objective Sorting

The Outcome columns are displayed in the report based on the sequence of the configured objectives. The sequence can be modified even after tests are linked to the program.

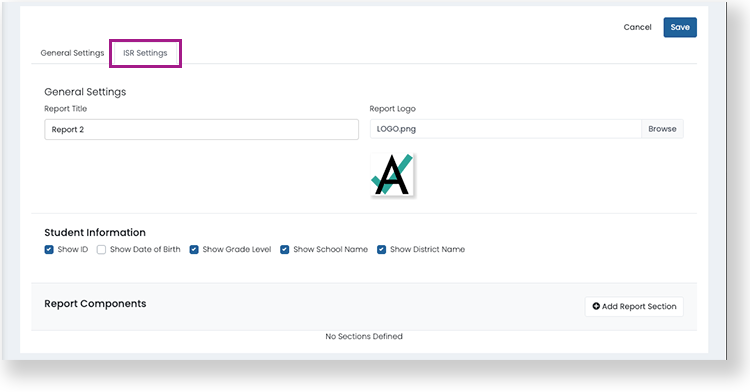

You can set up the Individual Student Results report on the ISR Settings tab. These settings can be modified at any time, even during test taking. The ISR report can be accessed from the Performance Report.

- Report Title: Enter a name for the report.

- Report Logo: Optional. Select a logo by browsing, or drag and drop a file. JPG, PNG, and GIF are supported.

- Student Information:

- Show ID: Show the student ID on the report.

- Show Date of Birth: Show the student's date of birth on the report.

- Show Grade Level: Show the student's grade level on the report.

- Show School Name: Show the student's school name on the report.

- Show District Name: Show the student's district on the report.

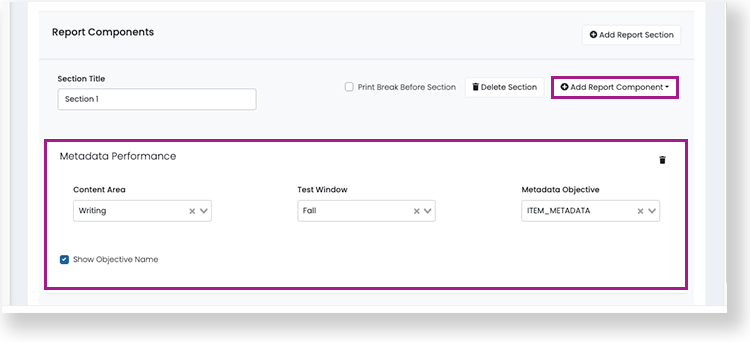

Report Components

After defining the general settings, you can select and configure each section you want to include on the ISR.

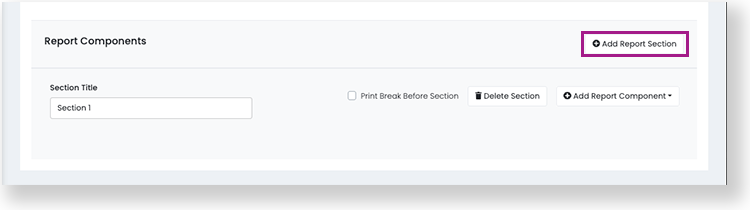

Add Report Section: Select to add a report section. Each section may contain one or more report components.

- Section Title: Enter a name for the report section.

- Add Report Component: Select to add a report component to a section. You can add several report components to a section.

- For each component, select a single Content Area, one or more Test Windows, and an Objective (database field).

If data does not exist for an ISR component you have defined, it does not appear on the report.

You can add the following report components to the ISR.

- External Named: Only supports external data (currently TestNav). The External Objective is from TestNav data, such as scaled score.

- Growth: Shows a graph with performance over time. For performance levels, score groups appear as background colors on the chart. Supports both ADAM and external data from TestNav.

- Metadata Performance: Similar to the Growth report but using a grouping of items, such as reading comprehension or writing ability. Supports ADAM data only.

- Outcome Table: Only supports external data from TestNav. You can build a table with up to two columns and as many rows as needed. You can also report a range value within the Outcome Table component: when a lower and upper bound are configured, ADAM reports the range on the Individual Student Report.

- Score and Performance: Shows the scaled score and a bar with colors for each performance level. Select the checkboxes to show test information, score group summary statements, and school and district averages. Supports ADAM data only.

- Score with Bar: Only supports external data from TestNav. Similar to the Score and Performance component but with an objective from TestNav data.

- Simple Level Output: Select a Performance Level Objective. Supports ADAM data only.

- Text: Use this component to share text information, including links. Enter a heading and text or links in the content area.

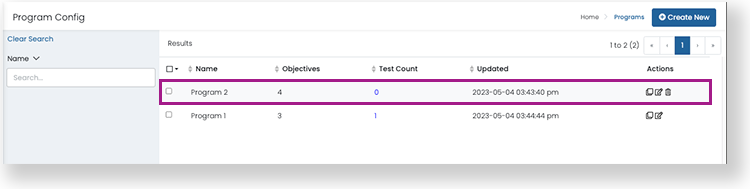
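The section and component structure described above, together with the rule that components without data are left off the report, can be sketched like this. The class names and the shape of the `results` set are hypothetical, and the code assumes a component is shown when data exists for at least one of its selected test windows.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    kind: str            # e.g. "Growth" or "Score and Performance"
    content_area: str    # a single content area
    windows: list        # one or more test windows
    objective: str       # objective key, e.g. "PERF_LEVEL"


@dataclass
class Section:
    title: str
    components: list = field(default_factory=list)


def visible_components(section, results):
    """Keep components that have data in at least one selected window.

    results: set of (content_area, window, objective) tuples with data.
    ASSUMPTION: one window with data is enough for the component to appear.
    """
    return [
        c for c in section.components
        if any((c.content_area, w, c.objective) in results for w in c.windows)
    ]


section = Section("Progress", [
    Component("Growth", "Math", ["Fall", "Spring"], "PERF_LEVEL"),
    Component("Growth", "ELA", ["Fall"], "PERF_LEVEL"),
])
results = {("Math", "Fall", "PERF_LEVEL")}  # this student has Math data only

print([c.content_area for c in visible_components(section, results)])  # ['Math']
```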

- Select Save. The program appears on the Program Config page.
